A full-featured Markdown editor with live preview and syntax highlighting. Supports GitHub-flavored Markdown.

See the change log for changes and road map.


Features

- Powered by Markdig - the best markdown parser

- Syntax highlighting

- Live preview window with scroll sync

- Mermaid chart support

- CommonMark and GitHub-flavored Markdown

- High-DPI support

- Drag 'n drop of images supported

- Paste image from clipboard directly onto document

- Outlining/folding of code blocks

- Keyboard shortcuts

- Light Bulbs

- Brace completion with type-through

- Lightning fast

- Auto-generate HTML files

Syntax highlighting

All fonts can be changed in the Tools -> Options -> Environment -> Fonts and Colors dialog.

GitHub and other flavors

Advanced Markdown extensions add more features to the syntax, including pipe tables, emoji, mathematics, and more.

Live Preview Window

The preview window opens on the right side of the document when the document is opened.

Every time the Markdown document is modified, the preview window updates.

Code blocks receive full syntax highlighting in the preview window. Here's an example of rendered JavaScript code.

The preview window is automatically scrolled to match the scroll position of the document. As the document is scrolled up and down, the preview window will follow.

Live preview can be disabled in the settings.

The syntax highlighter is powered by Prism.

Custom stylesheets

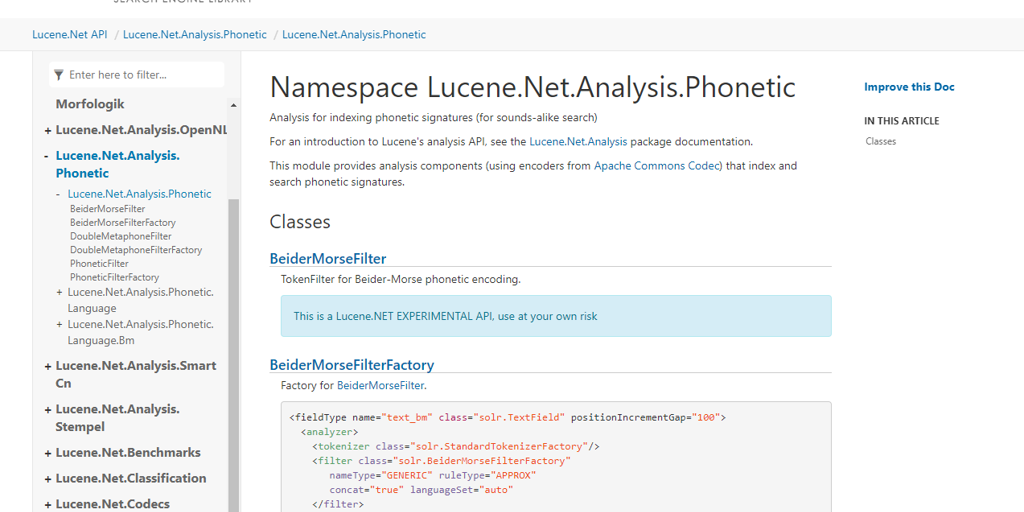


The preview window supports loading of custom stylesheets. It will look for a file called md-styles.css in the same directory as the currently opened markdown file or any parent directory.

If no md-styles.css file is found, it looks for one in %userprofile%. If it still isn't found, the built-in stylesheet is used.
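The lookup order above can be sketched in Python. This is a hypothetical illustration, not the extension's implementation: `find_stylesheet` is an invented name, the user profile directory is passed in rather than read from `%userprofile%`, and returning `None` stands for "use the built-in stylesheet".

```python
from pathlib import Path
from typing import Optional


def find_stylesheet(markdown_file: Path, user_profile: Path,
                    name: str = "md-styles.css") -> Optional[Path]:
    """Return the custom stylesheet to use, or None for the built-in one.

    Hypothetical sketch: search the Markdown file's directory and every
    parent directory, then fall back to the user profile directory.
    """
    directory = markdown_file.resolve().parent
    # Path.parents yields every ancestor directory up to the filesystem root.
    for candidate_dir in (directory, *directory.parents):
        candidate = candidate_dir / name
        if candidate.is_file():
            return candidate
    fallback = user_profile / name
    if fallback.is_file():
        return fallback
    return None  # the caller falls back to the built-in stylesheet
```

Because the nearest directory is checked first, a stylesheet next to the document wins over one in a parent folder, which in turn wins over the profile copy.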

To generate a custom stylesheet, simply right-click inside the markdown editor document and select Add Custom Stylesheet...

The name of the custom stylesheet can be changed in the settings.

Drag 'n drop images

Drag an image directly from Solution Explorer onto the document to insert the appropriate markdown that will render the image.

Paste images

This is really helpful for copying images from a browser or for inserting screenshots. Simply copy an image to the clipboard and paste it directly into the document. You are prompted for a file name relative to the document, and then the appropriate Markdown is inserted.

It will even parse the file name and make a friendly name to use for the alt text.
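A hypothetical sketch of the Markdown that could be inserted for a pasted or dropped image. The file name rules (splitting on dashes, underscores, dots, and camelCase) are assumptions, since the exact rules aren't described here, and `friendly_name` and `image_markdown` are invented names.

```python
import os
import re


def friendly_name(file_name: str) -> str:
    """Derive alt text from a file name (hypothetical rules).

    'my-screenShot_01.png' -> 'My screen shot 01'
    """
    stem = os.path.splitext(os.path.basename(file_name))[0]
    # Insert a space at lowercase-to-uppercase (camelCase) boundaries.
    spaced = re.sub(r"(?<=[a-z])(?=[A-Z])", " ", stem)
    # Treat dashes, underscores, dots and whitespace as word separators.
    words = [w.lower() for w in re.split(r"[-_.\s]+", spaced) if w]
    text = " ".join(words)
    return text[:1].upper() + text[1:]


def image_markdown(document_path: str, image_path: str) -> str:
    """Build a Markdown image reference whose path is relative to the document."""
    relative = os.path.relpath(image_path, start=os.path.dirname(document_path))
    # Markdown links conventionally use forward slashes, even on Windows.
    relative = relative.replace(os.sep, "/")
    return f"![{friendly_name(image_path)}]({relative})"
```

For example, an image saved as `images/my-screenShot_01.png` next to `readme.md` would be referenced as `![My screen shot 01](images/my-screenShot_01.png)`.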

Outlining


Fenced code blocks and HTML blocks can be collapsed, so that this:

...can be collapsed into this:
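A simplified sketch of how the collapsible regions for fenced code blocks might be located. It is an assumption-laden illustration, not the extension's outlining code: it follows the CommonMark rule that a closing fence uses the same character as the opening fence, is at least as long, and carries no info string, but it ignores HTML blocks, fences nested in list items, and unclosed fences.

```python
import re
from typing import List, Optional, Tuple

# An opening or closing fence: up to three spaces, then 3+ backticks or tildes.
FENCE = re.compile(r"^ {0,3}(`{3,}|~{3,})")


def fenced_regions(lines: List[str]) -> List[Tuple[int, int]]:
    """Return (start, end) 0-based line indexes of each closed fenced code block."""
    regions: List[Tuple[int, int]] = []
    opening: Optional[Tuple[str, int, int]] = None  # (char, length, start line)
    for index, line in enumerate(lines):
        match = FENCE.match(line)
        if not match:
            continue
        fence = match.group(1)
        if opening is None:
            opening = (fence[0], len(fence), index)
        elif (fence[0] == opening[0] and len(fence) >= opening[1]
              and line.strip() == fence):
            regions.append((opening[2], index))
            opening = None
    # A fence still open at the end of the document is ignored in this sketch.
    return regions
```

Each returned pair is a region an editor could fold down to its first line.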

Keyboard shortcuts

Ctrl+B makes the selected text bold by wrapping it with **.

Ctrl+I makes the selected text italic by wrapping it with _.

Ctrl+Shift+C wraps the selected text in a code block.

Ctrl+Space checks and unchecks task list items.

Tab increases indentation of list items.

Shift+Tab decreases indentation of list items.

Ctrl+K,C wraps the selection with HTML comments.

Ctrl+K,U removes HTML comments surrounding the selection/caret.

Ctrl+PgUp moves the caret to the previous heading.

Ctrl+PgDown moves the caret to the next heading.
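Several of these shortcuts boil down to simple string transformations. The helpers below are hypothetical sketches for a single selection or line, not the extension's code; the spacing inside HTML comments and the heading rule (ATX headings only, without skipping fenced code) are assumptions.

```python
import re
from typing import List

TASK_ITEM = re.compile(r"^(\s*[-*+] \[)([ xX])(\] )")
ATX_HEADING = re.compile(r"^ {0,3}#{1,6}(\s|$)")


def bold(selection: str) -> str:
    """Ctrl+B: wrap the selection with **."""
    return f"**{selection}**"


def italic(selection: str) -> str:
    """Ctrl+I: wrap the selection with _."""
    return f"_{selection}_"


def add_comment(selection: str) -> str:
    """Ctrl+K,C: wrap the selection in an HTML comment."""
    return f"<!-- {selection} -->"


def remove_comment(text: str) -> str:
    """Ctrl+K,U: strip a surrounding HTML comment, if there is one."""
    match = re.fullmatch(r"<!--\s?(.*?)\s?-->", text, flags=re.DOTALL)
    return match.group(1) if match else text


def toggle_task(line: str) -> str:
    """Ctrl+Space: flip '- [ ] item' to '- [x] item' and back."""
    match = TASK_ITEM.match(line)
    if not match:
        return line
    state = " " if match.group(2) in "xX" else "x"
    return match.group(1) + state + match.group(3) + line[match.end():]


def next_heading(lines: List[str], caret_line: int) -> int:
    """Ctrl+PgDown: index of the next heading line, or caret_line if there is none."""
    for index in range(caret_line + 1, len(lines)):
        if ATX_HEADING.match(lines[index]):
            return index
    return caret_line
```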

Light Bulbs

The suggested actions shown in light bulbs make it easier to perform common tasks.

For instance, converting the selected text to a link will result in this:

Auto-generate HTML files

By right-clicking any Markdown file in Solution Explorer, you can turn on automatic generation of an HTML file.

It wraps the rendered Markdown output in an HTML template that looks like this:

You can provide your own HTML template by placing a file named md-template.html in the same folder as the Markdown file or in a parent folder. Just make sure to include the [title] and [content] tokens in the template.
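Filling the template amounts to replacing the two tokens. A minimal sketch, assuming plain string replacement: `render_page` and the default template below are hypothetical (they are not the extension's built-in template), and HTML-escaping the title is an assumption of this sketch.

```python
import html

# Hypothetical stand-in for an md-template.html file.
DEFAULT_TEMPLATE = """<!DOCTYPE html>
<html>
<head><title>[title]</title></head>
<body>
[content]
</body>
</html>"""


def render_page(title: str, content_html: str, template: str = DEFAULT_TEMPLATE) -> str:
    """Insert the title and rendered Markdown into a template with [title]/[content]."""
    if "[title]" not in template or "[content]" not in template:
        raise ValueError("template must contain the [title] and [content] tokens")
    # Replace [title] before inserting the content, so a literal "[title]"
    # inside the rendered content is left untouched.
    page = template.replace("[title]", html.escape(title))
    return page.replace("[content]", content_html)
```

Checking for both tokens up front mirrors the requirement above that a custom template include them.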

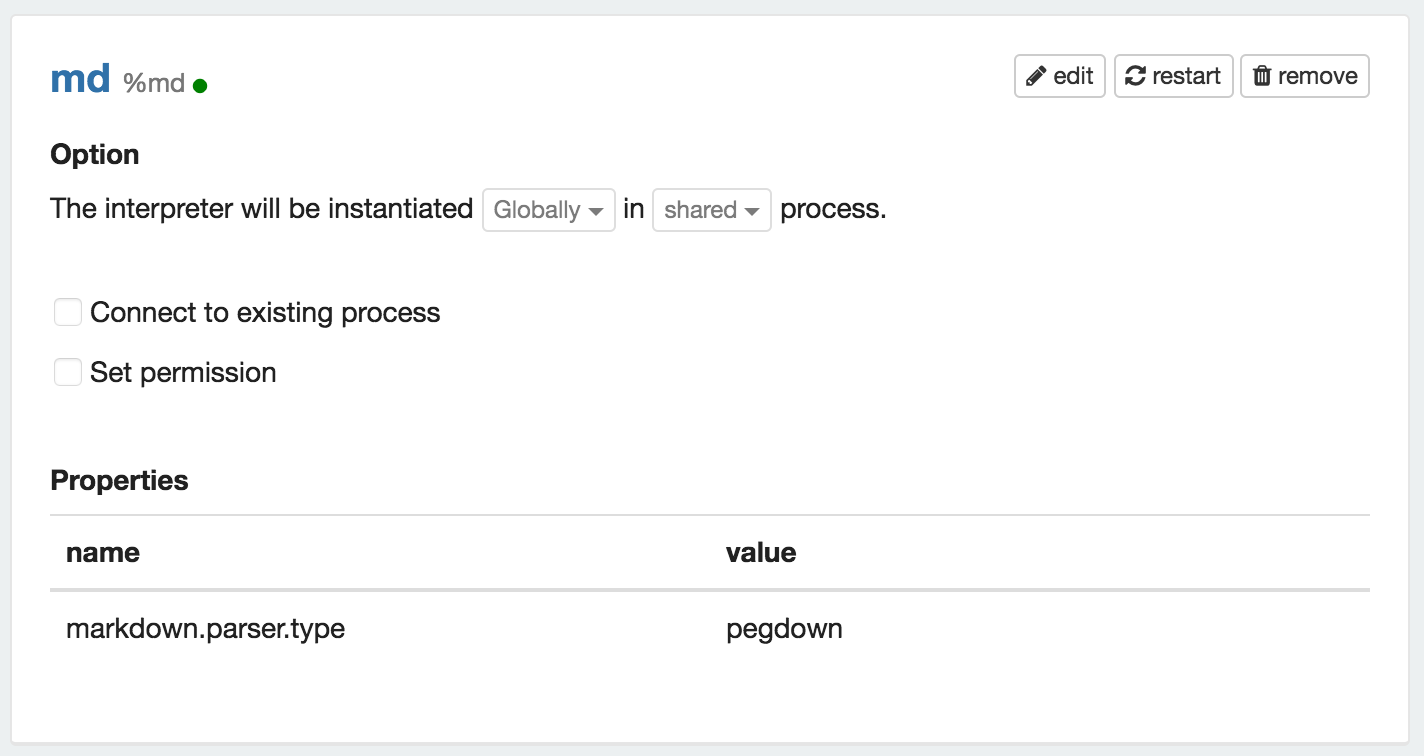

Settings

Control the settings for this extension under Tools -> Options -> Text Editor -> Markdown.

Contribute

Check out the contribution guidelines if you want to contribute to this project.

To clone and build this project yourself, make sure to install the Extensibility Tools 2015 extension for Visual Studio, which enables some features used by this project.

License

In this tutorial, you learn how to create a dataframe from a csv file, and how to run interactive Spark SQL queries against an Apache Spark cluster in Azure HDInsight. In Spark, a dataframe is a distributed collection of data organized into named columns. A dataframe is conceptually equivalent to a table in a relational database or a data frame in R/Python.
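Spark isn't needed to see what "named columns" means. The plain-Python sketch below is not the Spark API and does nothing distributed; it parses csv text whose first row is a header into a dict of column name to values, with naive int/float inference. `read_columns` and `infer` are hypothetical names.

```python
import csv
import io
from typing import Dict, List, Union

Value = Union[int, float, str]


def infer(text: str) -> Value:
    """Naive type inference: try int, then float, otherwise keep the string."""
    for convert in (int, float):
        try:
            return convert(text)
        except ValueError:
            pass
    return text


def read_columns(csv_text: str) -> Dict[str, List[Value]]:
    """Parse csv text with a header row into named columns (column-oriented)."""
    # restval="" fills in missing trailing fields so infer() always gets a string.
    reader = csv.DictReader(io.StringIO(csv_text), restval="")
    columns: Dict[str, List[Value]] = {name: [] for name in reader.fieldnames or []}
    for row in reader:
        for name, values in columns.items():
            values.append(infer(row[name]))
    return columns
```

A real dataframe adds a schema, lazy evaluation, and partitioning across the cluster, but the column-name-to-values view is the same idea.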

In this tutorial, you learn how to:

- Create a dataframe from a csv file

- Run queries on the dataframe

Prerequisites

An Apache Spark cluster on HDInsight. See Create an Apache Spark cluster.

Create a Jupyter Notebook

Jupyter Notebook is an interactive notebook environment that supports various programming languages. The notebook allows you to interact with your data, combine code with markdown text and perform simple visualizations.

Edit the URL https://SPARKCLUSTER.azurehdinsight.net/jupyter by replacing SPARKCLUSTER with the name of your Spark cluster. Then enter the edited URL in a web browser. If prompted, enter the cluster login credentials for the cluster.

From the Jupyter web page, select New > PySpark to create a notebook.

A new notebook is created and opened with the name Untitled (Untitled.ipynb).

Note

By using the PySpark kernel to create a notebook, the spark session is automatically created for you when you run the first code cell. You do not need to explicitly create the session.

Create a dataframe from a csv file

Applications can create dataframes directly from files or folders on remote storage such as Azure Storage or Azure Data Lake Storage; from a Hive table; or from other data sources supported by Spark, such as Cosmos DB, Azure SQL DB, DW, and so on. The following screenshot shows a snapshot of the HVAC.csv file used in this tutorial. The csv file comes with all HDInsight Spark clusters. The data captures the temperature variations of some buildings.

Paste the following code in an empty cell of the Jupyter Notebook, and then press SHIFT + ENTER to run the code. The code imports the types required for this scenario:

When running an interactive query in Jupyter, the web browser window or tab caption shows a (Busy) status along with the notebook title. You also see a solid circle next to the PySpark text in the top-right corner. After the job is completed, it changes to a hollow circle.

Note the session id returned. In the picture above, the session id is 0. If desired, you can retrieve the session details by navigating to https://CLUSTERNAME.azurehdinsight.net/livy/sessions/ID/statements, where CLUSTERNAME is the name of your Spark cluster and ID is your session id number.

Run the following code to create a dataframe and a temporary table (hvac).

Run queries on the dataframe

Once the table is created, you can run an interactive query on the data.

Run the following code in an empty cell of the notebook:

The following tabular output is displayed.

You can also view the results in other visualizations. To see an area graph for the same output, select Area, and then set the other values as shown.
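An interactive query like the one in this step is essentially a filter plus a derived column. The sketch below is a plain-Python analogue, not Spark and not the query from the original tutorial (which isn't reproduced here). It keeps the rows for one date and computes the difference between target and actual temperature per building. `temp_diff_for_date` is a hypothetical name, and the column names `Date`, `BuildingID`, `TargetTemp`, and `ActualTemp` are assumptions about HVAC.csv.

```python
from typing import Dict, Iterable, List, Union


def temp_diff_for_date(rows: Iterable[Dict[str, str]],
                       date: str) -> List[Dict[str, Union[str, float]]]:
    """Plain-Python analogue of a hypothetical query such as:

    SELECT BuildingID, (TargetTemp - ActualTemp) AS temp_diff, Date
    FROM hvac WHERE Date = '<date>'
    """
    return [
        {
            "BuildingID": row["BuildingID"],
            # Derived column, like the AS temp_diff expression.
            "temp_diff": float(row["TargetTemp"]) - float(row["ActualTemp"]),
            "Date": row["Date"],
        }
        for row in rows
        if row["Date"] == date  # the WHERE clause
    ]
```

Rows read with `csv.DictReader` are dicts of strings, so they can be passed in directly.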

From the notebook menu bar, navigate to File > Save and Checkpoint.

If you're starting the next tutorial now, leave the notebook open. If not, shut down the notebook to release the cluster resources: from the notebook menu bar, navigate to File > Close and Halt.

Clean up resources

With HDInsight, your data and Jupyter Notebooks are stored in Azure Storage or Azure Data Lake Storage, so you can safely delete a cluster when it isn't in use. You're also charged for an HDInsight cluster, even when it's not in use. Since the charges for the cluster are many times more than the charges for storage, it makes economic sense to delete clusters when they aren't in use. If you plan to work on the next tutorial immediately, you might want to keep the cluster.

Open the cluster in the Azure portal, and select Delete.

You can also select the resource group name to open the resource group page, and then select Delete resource group. Deleting the resource group deletes both the HDInsight Spark cluster and the default storage account.

Next steps

In this tutorial, you learned how to create a dataframe from a csv file, and how to run interactive Spark SQL queries against an Apache Spark cluster in Azure HDInsight. Advance to the next article to see how the data you registered in Apache Spark can be pulled into a BI analytics tool such as Power BI.